Problems and Technical Issues with Rosetta@home



| Author | Message |

|---|---|

KaguraMea Joined: 17 Nov 18 Posts: 31 Credit: 1,418,303 RAC: 49 |

"I'm confused, since when do I need to buy a license for Rosetta to let it run?" It just means a license is needed if someone wants to use Rosetta for commercial purposes (e.g. a pharma company using Rosetta to research protein folding for a new drug). BOINC runners don't need to care about this message, since we're not getting the research results (Baker Lab does) and it's not for commercial purposes either. |

KaguraMea Joined: 17 Nov 18 Posts: 31 Credit: 1,418,303 RAC: 49 |

"I have found the majority of the Biology/Medicine projects except for Rosetta at Home and World Community Grid are simply not active." There's SiDock, y'all know... |

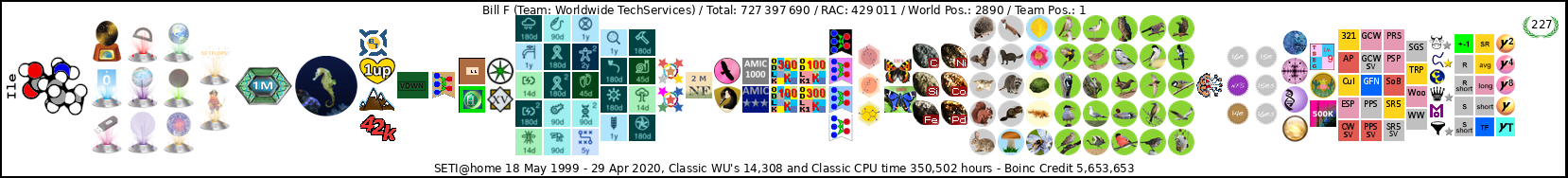

Bill F Joined: 29 Jan 08 Posts: 61 Credit: 1,814,426 RAC: 1 |

Sid, we joined about the same time, but I have not been as focused on Rosetta as you have been. I have spread myself across a lot of projects, but I favor science-based projects. Like you, I'm in for the long run. Bill F In October 1969 I took an oath to support and defend the Constitution of the United States against all enemies, foreign and domestic; There was no expiration date.

|

|

Sid Celery Joined: 11 Feb 08 Posts: 2489 Credit: 46,551,772 RAC: 3,092 |

Sid 2 weeks apart, 17yrs ago. That must have been a very dull period of time for us both to arrive here. And I've only just noticed you have more credit from retired projects alone than I do from all mine. It's only recently I've found a 3rd live project worth running, even as a backup. I don't like to spread myself too thinly - Rosetta remains my #1 throughout.

|

|

Sid Celery Joined: 11 Feb 08 Posts: 2489 Credit: 46,551,772 RAC: 3,092 |

Even with the documented issues on the Project Servers I have had enough tasks on three active systems to move up 547 positions on BOINCStats in the last 30 days. That zero failure rate from the last batch has made all the difference. We were down to ~150k tasks left when I looked earlier today and I've just taken a look at how close to zero we are. We aren't. The next batch has already arrived. 2.3m tasks showing on the front page - no downtime this week. Good news, whether by accident or design.

|

[VENETO] boboviz Joined: 1 Dec 05 Posts: 2129 Credit: 12,498,759 RAC: 4,880 |

"2 weeks apart, 17yrs ago. That must have been a very dull period of time for us both to arrive here." We are getting old, my friend... "I don't like to spread myself too thinly - Rosetta remains my #1 throughout." +1 |

Greg_BE Joined: 30 May 06 Posts: 5770 Credit: 6,139,760 RAC: 0 |

I went away during the dry spell. But one of the projects has gone down for an undetermined amount of time. So here I am again. I see I missed out on the start of the new stuff since it's down to 4,800+ left. What's been going on here in the last 6 months or so? Is it still sporadic or did they find some stuff to keep us busy full time for a half year or so? |

|

Sid Celery Joined: 11 Feb 08 Posts: 2489 Credit: 46,551,772 RAC: 3,092 |

"I went away during the dry spell. But one of the projects has gone down for an undetermined amount of time." They haven't made it easy by any means, but we seem to have more uptime than downtime in the last few months. How long it will last, who can say, but if you lower your expectations a reasonable amount of work gets delivered here.

|

|

Bill Swisher Joined: 10 Jun 13 Posts: 87 Credit: 62,408,235 RAC: 31,085 |

"So here I am again... What's been going on here in the last 6 months or so?" If you decide to rejoin us, be aware of an ongoing problem with downloading work and the workaround (there's a long saga about this).

|

|

PMH_UK Joined: 9 Aug 08 Posts: 24 Credit: 1,243,749 RAC: 0 |

The front page shows about 2,000,000 jobs queued currently. Server status shows about 5,000 released at a time. Paul. |

Greg_BE Joined: 30 May 06 Posts: 5770 Credit: 6,139,760 RAC: 0 |

"I went away during the dry spell. But one of the projects has gone down for an undetermined amount of time." Ah! Hey there Sid, well, I am looking for something steady, with like a week of downtime. If they do it like last time and hand out small scraps once a month, then I am not going to be happy. |

Grant (SSSF) Joined: 28 Mar 20 Posts: 1899 Credit: 18,534,891 RAC: 0 |

"If they do it like last time and hand out small scraps once a month, then I am not going to be happy." Then you won't be happy. Your cache is too big for Rosetta: it takes 3 days or more for some work to be returned for one of your projects. Deadlines are still 3 days here. The larger your cache, the less likely you are to get work when it is available. As it is, there are generally a few small batches of work released each week, and there have been large batches of work released weekly lately, although prior to that it was every few weeks. In some batches a large percentage of tasks error out, so it doesn't take long to chew through them. If you set your cache to 0.1 days plus 0.01 additional days, you would get work whenever it becomes available and your system would be kept busy with work from here or your other projects. But if you insist on having a large cache (i.e. anything larger than 0.5 days when attached to multiple projects), and expecting the number of cores/threads in use at any given time to relate to the resource share values you have, you will not be happy. So Rosetta is still not the project for you. Edit: oh, and the validators only run for about half of the week. Grant Darwin NT |

|
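A rough sketch of the arithmetic behind Grant's point about cache size and the 3-day deadline. This is a crude worst-case model, not how the BOINC scheduler actually estimates things: it assumes a newly fetched task waits behind a completely full cache and then runs for its full target runtime. The function names and the 8-hour runtime are illustrative assumptions.

```python
def estimated_turnaround_days(min_days, extra_days, task_runtime_hours):
    """Crude worst case (an assumption, not BOINC's real estimate):
    the task waits behind a full cache, then runs to completion."""
    return min_days + extra_days + task_runtime_hours / 24.0


def fits_deadline(min_days, extra_days, task_runtime_hours, deadline_days=3.0):
    """True if the worst-case turnaround is within the deadline."""
    turnaround = estimated_turnaround_days(min_days, extra_days, task_runtime_hours)
    return turnaround <= deadline_days


# Compare the small cache suggested in the post with a 3-day cache,
# assuming 8-hour tasks and the 3-day deadline mentioned above.
for label, min_days, extra_days in [("0.1 + 0.01 days", 0.1, 0.01),
                                    ("3 + 0.01 days", 3.0, 0.01)]:
    days = estimated_turnaround_days(min_days, extra_days, 8)
    ok = fits_deadline(min_days, extra_days, 8)
    print(f"cache {label}: worst case ~{days:.2f} days, within deadline: {ok}")
```

Under these assumptions a cache close to the deadline length leaves no slack at all, which is the situation the post warns about; real behaviour also depends on resource shares and on how many projects are attached.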

Sid Celery Joined: 11 Feb 08 Posts: 2489 Credit: 46,551,772 RAC: 3,092 |

"If they do it like last time and hand out small scraps once a month, then I am not going to be happy." "Then you won't be happy." I'm not sure that's a fair representation. Looking at the chart for Rosetta credit per day (last 60 days), tasks started coming through 40-50 days ago (post-Xmas) in smaller batches, but over the last 3 or 4 weeks we've been getting batches of 2-2.5 million once per week, every week. One of those, yes, had quite a high error rate, but certainly the most recent two have been error-free and lasted pretty much the whole week. The chart shows a weekly blip, which is where the validators go down and no credit is awarded, but work was fully available over that period and credit was fully caught up after 2 days. 30-50 days may or may not be reliable enough for Greg, but it's a lot better than we had last year. And his cache looks absolutely fine to me for a 16-core machine. I'd have more to say about increasing his 6hr runtime to the now-default 8hrs.

|

Greg_BE Joined: 30 May 06 Posts: 5770 Credit: 6,139,760 RAC: 0 |

"If they do it like last time and hand out small scraps once a month, then I am not going to be happy." "Then you won't be happy." Sid - Target CPU run time is 6 hours. Cache is ok. I run a bunch of other projects to keep the machine busy, mainly GPU stuff, but stuff like WCG and Einstein has been a favorite of mine for quite some time. |

|

Sid Celery Joined: 11 Feb 08 Posts: 2489 Credit: 46,551,772 RAC: 3,092 |

"If they do it like last time and hand out small scraps once a month, then I am not going to be happy." "Then you won't be happy." I'm glad to see you've decided to come back and are running consistently. Looks good. Don't mind me about the 6/8hr runtime - I've seen a lot worse - but it does help eke out the tasks for both you and everyone else just that little bit longer, mainly because I'm not entirely confident of future supply, which has been one of your legitimate concerns too. It's Wednesday, so the validators are down yet again, but past form consistently says they'll return on Friday. No-one has any idea why this started happening while you've been away.

|

|
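To put a number on Sid's remark that a longer target runtime helps eke out the tasks, here is a back-of-the-envelope sketch. It assumes every task runs for exactly its target runtime and that all 16 threads crunch Rosetta around the clock; both are simplifications, and `tasks_per_day` is a made-up helper name.

```python
def tasks_per_day(threads, runtime_hours):
    """Tasks consumed per day if every thread runs tasks back to back
    for exactly the target runtime (a simplifying assumption)."""
    return threads * 24.0 / runtime_hours


six_hour = tasks_per_day(16, 6)    # 6-hour target runtime
eight_hour = tasks_per_day(16, 8)  # 8-hour target runtime

print(f"6hr runtime: {six_hour:.0f} tasks/day")
print(f"8hr runtime: {eight_hour:.0f} tasks/day")
print(f"the same number of tasks lasts {six_hour / eight_hour:.2f}x longer at 8hrs")
```

On these assumptions the same machine draws a quarter fewer tasks per day at 8 hours than at 6, so a fixed batch stretches about a third further.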

Bill Swisher Joined: 10 Jun 13 Posts: 87 Credit: 62,408,235 RAC: 31,085 |

"Cache is ok. I run a bunch of other projects to keep the machine, mainly GPU stuff, but stuff like WCG and Einstein has been a favorite of mine for quite some time." Mine all seem to think Rosetta will take 8 hours, even though (depending on the processor) it's usually a bit over 4. My cache(s) are set to "3days +.01". No GPUs, or they're unrecognized. I run WCG, Rosetta and DENIS. There is one computer that is opened up to let Einstein fight for the 4 cores available (plus one of the 16-thread machines allows it on 1 thread only, if at all).

|

Tom M Joined: 20 Jun 17 Posts: 178 Credit: 36,299,045 RAC: 2 |

So far, a 1 day cache has been keeping my system busy. I do run WCG at the same resource level. And so far, my system is still favoring R@H. Yes, I have 1 CPU thread running E@H on this CPU-only box. I am set up so that if R@H stops sending tasks, WCG is "supposed" to take on all those CPU threads. I am pleased it hasn't been tested yet. :) Proud member of the O.F.A. (Old Farts Association) |

Tom M Joined: 20 Jun 17 Posts: 178 Credit: 36,299,045 RAC: 2 |

Has anyone messaged Zari about the "boinc-process" server shutting down on a regular Wed through Friday schedule? Proud member of the O.F.A. (Old Farts Association) |

|

Jean-David Beyer Joined: 2 Nov 05 Posts: 221 Credit: 7,572,744 RAC: 0 |

It is now 9am New York City time, and many of the servers are down, as usual. Another week, and down again as usual.

|

|

Random Joined: 10 Mar 24 Posts: 8 Credit: 123,481 RAC: 606 |

I get WUs that show up in tasks and transfers but they are stuck at 0. I have cleared all the tasks, no change. Updated the project, no change, and finally reset the project with a computer restart. It's been like this for a month. Linux 7.18.1. What's going on? |


©2025 University of Washington

https://www.bakerlab.org